Do you know what your AI is doing? 3 questions CEOs need to ask

Scott Zoldi of FICO

“The buck stops here” is a saying made famous in the 1940s by Harry S. Truman, 33rd President of the United States. It still resonates with today’s CEOs, who ultimately bear the responsibility for their companies’ mistakes and misdeeds. Except, apparently, when it comes to artificial intelligence (AI), says Dr. Scott Zoldi, chief analytics officer at FICO.

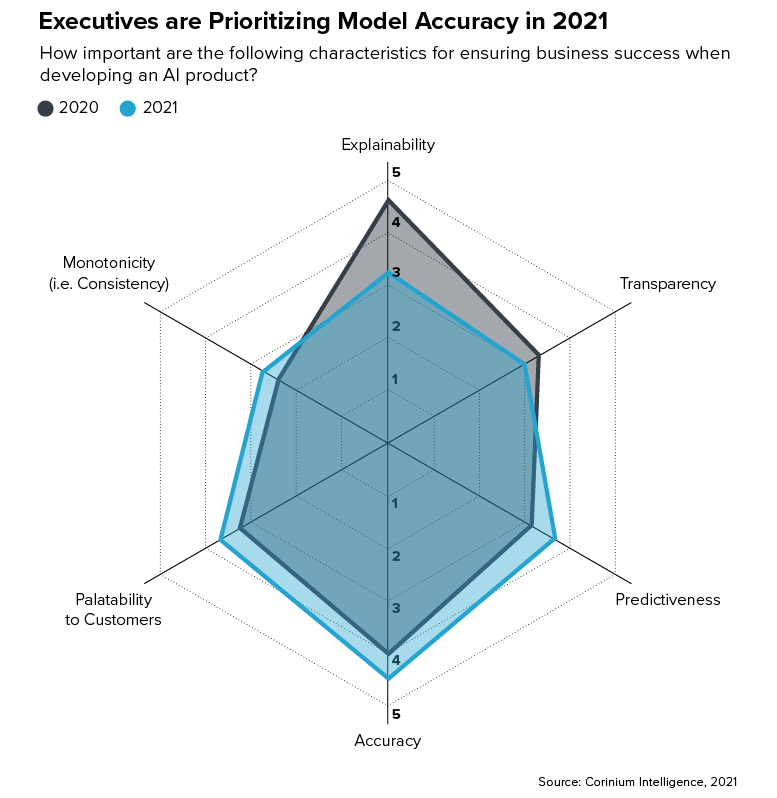

In “The State of Responsible AI,” a research report recently published by Corinium Global Intelligence and sponsored by FICO, a survey of 100 C-level executives found that:

- 65% of respondents’ companies can’t explain how specific AI model decisions or predictions are made

- 73% have struggled to get executive support for prioritising AI ethics and responsible AI practices

- 6% said that AI team diversity is actively managed.

It appears that many CEOs may be unaware of how their company is developing AI technology and how it’s being applied. But they need to know. CEOs and boards of directors must be proactively engaged in how their organisations are using AI, and in building AI teams that reflect the diversity of their communities.

If your CEO does not want to be added to the many lists of reputation-wrecking AI failures, here are three essential questions she or he needs to ask so that your organisation can start making ethical, unbiased AI-driven business decisions.

- How is the analytic organisation structured today?

  - What is its composition? Is diversity achieved, managed and monitored for drift?

  - Is the organisation matrixed or led by a single leader? What are the implications and practical considerations of each?

- Is there an agreed and adhered-to corporate model development governance framework? How is it enforced and revisited to the benefit of the data scientists, the business, and the external world?

  - What are the development standards and tools being used, and how are standards enforced and maintained?

  - Which AI technologies are allowed for use? Are they explainable, ethical and efficient?

  - Do testing standards focus on established bias thresholds? Who is signing off that standards are met?

  - What synthetic data or stability testing is being used to ensure models will operate effectively during changing times?

  - Is monitoring in place and, if so, what kind? Are there thresholds for stopping the use of non-conforming models, whether because of data drift, increasing bias or model performance degradation?

- What is your philosophy around AI research, and why?

  - Are we trying to demonstrate the art of the possible, with an emphasis on invention and research publication? Or are we more measured, and less willing to assume the risks associated with exaggerated AI excitement?

  - How does AI innovation manifest across the organisation? Who operationalises, monitors and governs new AI invention after it is developed?

  - What release cycle is followed, and what standards must be met, before an AI invention is released “into the wild”?
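To make the monitoring question above concrete, here is a minimal sketch of what "thresholds for stopping use of non-conforming models" could look like in practice. It is an illustration only, not FICO's method: the population stability index (PSI) is one commonly used drift measure, and the metric names (`psi`, `approval_rate_gap`, `auc_drop`) and limit values are hypothetical placeholders that a real governance framework would define.

```python
import math

def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions, each a list of bin proportions."""
    psi = 0.0
    for e, a in zip(expected, actual):
        # Floor the proportions so empty bins do not cause log(0) or division by zero.
        e = max(e, eps)
        a = max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi

# Hypothetical limits; real values would come from the governance framework.
THRESHOLDS = {"psi": 0.25, "approval_rate_gap": 0.05, "auc_drop": 0.03}

def breached_thresholds(metrics, thresholds=THRESHOLDS):
    """Return the names of monitored metrics that exceed their limit."""
    return sorted(
        name for name, limit in thresholds.items()
        if metrics.get(name, 0.0) > limit
    )

# Example: compare the training-time score distribution with recent production data.
drift = population_stability_index([0.5, 0.5], [0.8, 0.2])
breaches = breached_thresholds(
    {"psi": drift, "approval_rate_gap": 0.01, "auc_drop": 0.05}
)
if breaches:
    print("Escalate or stop using the model; breached:", breaches)
```

The point for a CEO is less the arithmetic than the existence of agreed limits: someone has to own the numbers in `THRESHOLDS` and decide what happens when one is breached.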

Corporate governance provides a template

The answers to these questions, while not easy, will give CEOs and boards the knowledge they need to better understand the AI their company is developing and enforce its governance going forward. The good news is, a framework for defining your AI governance already exists, based on four familiar tenets of corporate governance:

- Accountability is achieved only when each decision that occurs during the model development process is recorded in a way that cannot be altered or destroyed.

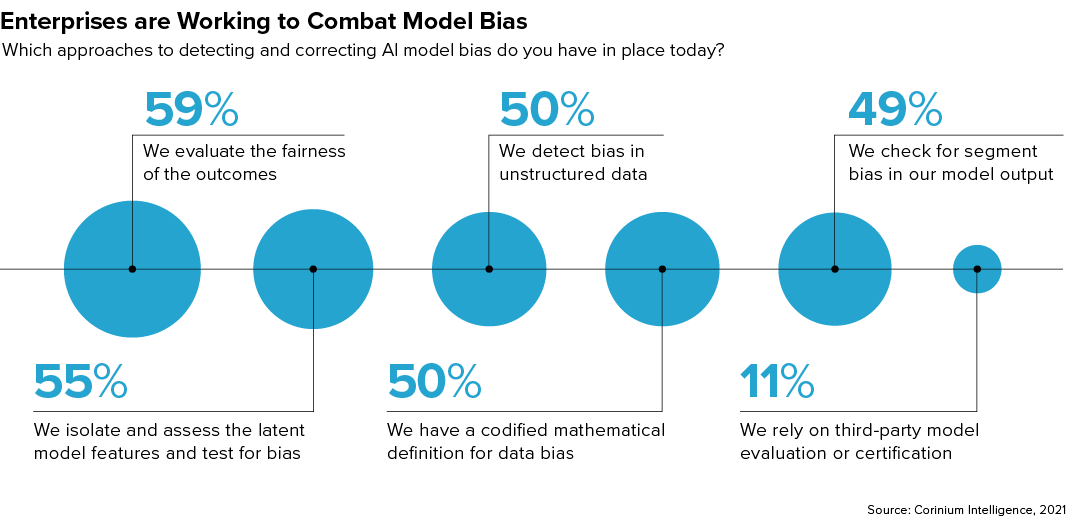

- Fairness requires that neither the model, nor the data it consumes, be biased.

- Transparency is necessary to understand model behaviour and adapt analytic models to rapidly changing environments without introducing bias.

- Responsibility is a heavy mantle to bear, but our societal climate underscores the need for companies to use AI technology with deep sensitivity to its impact.
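As an illustration of the accountability tenet above, the sketch below records model development decisions in a hash chain, where each record stores the hash of the one before it. This is an assumed technique chosen for the example, not one the article prescribes, and it makes alteration detectable rather than impossible. The function names `append_record` and `verify` and the sample decisions are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def _digest(decision, prev_hash):
    """SHA-256 over the decision text and the previous record's hash."""
    payload = json.dumps({"decision": decision, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_record(chain, decision):
    """Append a decision, linking it to the hash of the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({
        "decision": decision,
        "prev": prev_hash,
        "hash": _digest(decision, prev_hash),
    })
    return chain

def verify(chain):
    """Return True only if no record has been altered, removed or reordered."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != _digest(record["decision"], prev_hash):
            return False
        prev_hash = record["hash"]
    return True

log = []
append_record(log, "Dropped postcode as an input variable after bias review")
append_record(log, "Approved gradient-boosted model for pilot release")
print(verify(log))  # True

log[0]["decision"] = "No variables were dropped"
print(verify(log))  # False
```

Editing any earlier record changes its hash and breaks every link after it, which is what lets an auditor confirm that the recorded decision history is the original one.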

Every day we see examples of CEOs failing to embrace their responsibility to deliver safe and unbiased AI, and their companies subsequently battered by litigation and the wrath of AI advocacy groups. Equally discouraging, the fear of reputational damage can slow AI deployment. As government oversight of AI ramps up globally, it provides the ultimate push for CEOs to truly understand what their AI is doing.

The author is Dr. Scott Zoldi, chief analytics officer at FICO.

About the author

Dr. Scott Zoldi is responsible for the analytic development of FICO’s product and technology solutions. While at FICO, Scott has been responsible for authoring more than 100 analytic patents, with 65 granted and 45 pending. Scott serves on two boards of directors, Software San Diego and Cyber Center of Excellence. Scott received his Ph.D. in theoretical and computational physics from Duke University. He blogs here.
